Introduction

- As the demand for machine learning (ML) systems continues to rise, the importance of testing and quality assurance cannot be overstated.

- ML models are only as good as the data they are trained on and the algorithms that power them. Without proper testing, these models can produce inaccurate or unreliable results, leading to serious consequences in industries such as healthcare, finance, and transportation.

- At Applied AI Consulting, we understand the critical role that testing plays in ensuring the success of ML systems.

- As a leading digital engineering company that specializes in AI solution consulting and implementation, we have developed a comprehensive approach to ML testing that covers pre-train, post-train, and production stages.

- Try aiTest for testing any application UI or API, or for creating an automation suite on the fly.

- In this blog, we will summarize our ML testing framework and share some best practices for ensuring accuracy and reliability in ML systems.

Level 1: Smoke Testing

- Smoke testing is a quick and simple way to ensure that an ML model is functioning as expected.

- It involves running a few basic tests on the model to check if it can produce the desired output.

- These tests can include checking the model’s accuracy on a small dataset, verifying that it can handle missing values or outliers, and ensuring that it can handle different input formats.
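As a rough illustration of the checks listed above, here is a minimal smoke-test sketch in Python. The `predict` function is a hypothetical stand-in for a trained model, and its weights, the zero-imputation rule, and the test cases are all assumptions made for this example rather than part of any real framework.

```python
import math

def predict(features):
    """Hypothetical stand-in for a trained model; returns a score in [0, 1]."""
    weights = [0.4, -0.2, 0.1]  # Illustrative weights, not learned from data.
    cleaned = []
    for value in features:
        # Treat None and NaN as missing and impute 0.0.
        if value is None or (isinstance(value, float) and math.isnan(value)):
            cleaned.append(0.0)
        else:
            cleaned.append(float(value))
    score = sum(w * x for w, x in zip(weights, cleaned))
    score = max(-50.0, min(50.0, score))  # Clamp so exp() cannot overflow.
    return 1.0 / (1.0 + math.exp(-score))

def run_smoke_tests(model):
    """Run a handful of quick checks and return the names of failing cases."""
    cases = {
        "typical row": [1.0, 2.0, 3.0],
        "missing values": [None, float("nan"), 3.0],
        "extreme outlier": [1e9, -1e9, 0.0],
        "integers and numeric strings": [1, "2", 3.0],
    }
    failures = []
    for name, row in cases.items():
        try:
            output = model(row)
            # The output must be a float that can be read as a probability.
            if not (isinstance(output, float) and 0.0 <= output <= 1.0):
                failures.append(name)
        except Exception:
            failures.append(name)
    return failures

print(run_smoke_tests(predict))
```

An empty list means every case produced a usable output. A smoke test like this says nothing about model quality; it only catches crashes and obviously malformed outputs early.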

Level 2: Integration Testing and Unit Testing

- Integration testing involves testing the interaction between different components of an ML system.

- This can include testing how data is passed between different modules, how models are trained and evaluated, and how results are generated.

- Unit testing, on the other hand, focuses on testing individual components of the system, such as algorithms or data processing pipelines.

- Both integration and unit testing are essential for identifying and fixing bugs and for ensuring that the system works correctly as a whole.
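The difference between the two levels can be sketched with a toy pipeline. Everything here is assumed for illustration: `standardize` plays the role of a data processing step, `fit_threshold` stands in for training, and `run_pipeline` wires them together. The unit test exercises one component in isolation, while the integration test checks that the components work end to end.

```python
from statistics import mean, pstdev

def standardize(values):
    """Scale values to zero mean and unit variance (constant input maps to zeros)."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

def fit_threshold(xs, ys):
    """Toy 'training' step: the midpoint between the two class means."""
    positives = [x for x, y in zip(xs, ys) if y == 1]
    negatives = [x for x, y in zip(xs, ys) if y == 0]
    return (mean(positives) + mean(negatives)) / 2

def run_pipeline(raw_xs, ys):
    """Preprocess, train, and predict on the same data, end to end."""
    xs = standardize(raw_xs)
    threshold = fit_threshold(xs, ys)
    return [1 if x > threshold else 0 for x in xs]

def test_standardize_unit():
    # Unit test: one component, checked against its own contract.
    out = standardize([1.0, 2.0, 3.0])
    assert abs(mean(out)) < 1e-9
    assert abs(pstdev(out) - 1.0) < 1e-9
    assert standardize([5.0, 5.0]) == [0.0, 0.0]

def test_pipeline_integration():
    # Integration test: data flows through every stage and the result is sane.
    raw = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
    labels = [0, 0, 0, 1, 1, 1]
    assert run_pipeline(raw, labels) == labels

test_standardize_unit()
test_pipeline_integration()
```

The function names follow the `test_` convention so that a runner such as pytest could collect them, but they are called directly here to keep the sketch self-contained.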

🔢 Data

- Testing ML systems also requires careful attention to data.

- It is important to ensure that the data used to train and test the model is representative of the real-world data that the model will encounter.

- This can involve data cleaning, data augmentation, and data validation.

- It is also important to consider the ethical implications of the data used, such as bias and privacy concerns.
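Data validation in particular lends itself to automated checks. The sketch below assumes rows are plain dictionaries and that a schema maps each column to a type and an allowed range; the column names, ranges, and the `id` key are hypothetical. It also includes a simple check for records shared between the training and test splits, which is one common way test results become misleading.

```python
def validate_rows(rows, schema):
    """Return a list of human-readable problems found in `rows`.

    `schema` maps a column name to (type, minimum, maximum).
    """
    problems = []
    for i, row in enumerate(rows):
        for col, (typ, lo, hi) in schema.items():
            value = row.get(col)
            if value is None:
                problems.append(f"row {i}: missing {col}")
            elif not isinstance(value, typ):
                problems.append(f"row {i}: {col} has type {type(value).__name__}")
            elif not lo <= value <= hi:
                problems.append(f"row {i}: {col}={value} outside [{lo}, {hi}]")
    return problems

def find_leakage(train_rows, test_rows, key):
    """Return keys that appear in both splits."""
    train_keys = {row[key] for row in train_rows}
    return sorted(row[key] for row in test_rows if row[key] in train_keys)

# Illustrative schema and data; none of these values come from a real dataset.
schema = {"age": (int, 0, 120), "income": (float, 0.0, 1e7)}
train = [
    {"id": 1, "age": 34, "income": 52000.0},
    {"id": 2, "age": -3, "income": 41000.0},
    {"id": 3, "age": 51, "income": None},
]
test = [{"id": 2, "age": 29, "income": 38000.0}, {"id": 9, "age": 45, "income": 61000.0}]

print(validate_rows(train, schema))
print(find_leakage(train, test, key="id"))
```

Checks for bias and privacy are harder to automate and are not covered by this sketch.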

🤖 Models

- When it comes to testing ML models, there are several approaches that can be used.

- One common approach is to use test datasets that are separate from the training data.

- These datasets should be representative of the real-world data that the model will encounter and should be used to evaluate the model’s accuracy, precision, recall, and other performance metrics.

- Other approaches include stress testing, where the model is tested under extreme conditions, and adversarial testing, where the model is tested against intentionally crafted inputs designed to deceive it.
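A held-out evaluation and a basic robustness check might look like the following sketch. The model is a hypothetical one-feature threshold rule, and the robustness check uses small random noise, which is only a weak proxy for adversarial testing: a real adversarial test searches for worst-case inputs rather than sampling them at random. The `epsilon` and `trials` values are arbitrary assumptions.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle with a fixed seed and split into (train, test)."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def accuracy(model, rows):
    """Fraction of (input, label) pairs the model predicts correctly."""
    return sum(model(x) == y for x, y in rows) / len(rows)

def perturbation_stability(model, rows, epsilon=0.05, trials=20, seed=0):
    """Share of examples whose prediction never flips under small random noise."""
    rng = random.Random(seed)
    stable = 0
    for x, _ in rows:
        base = model(x)
        if all(model(x + rng.uniform(-epsilon, epsilon)) == base for _ in range(trials)):
            stable += 1
    return stable / len(rows)

def threshold_model(x):
    """Hypothetical classifier: predicts 1 when the feature exceeds 0.5."""
    return 1 if x > 0.5 else 0

# Synthetic data for illustration only.
data = [(i / 20, 1 if i / 20 > 0.5 else 0) for i in range(21)]
train_rows, test_rows = train_test_split(data)

print("held-out accuracy:", accuracy(threshold_model, test_rows))
print("stability under noise:", perturbation_stability(threshold_model, data))
```

Examples close to the decision boundary are the ones most likely to flip, so a low stability score points to inputs worth inspecting by hand.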

Post-train tests

- Post-train tests are used to ensure that a trained ML model performs as expected, both before it is released and after it has been deployed.

- These tests can include monitoring the model’s performance over time, testing how it handles new data, and verifying that it is still accurate and reliable.

- It is important to have a robust monitoring system in place to catch any issues that may arise and to ensure that the model is always performing at its best.
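One way to monitor how a model's inputs change over time is to compare a recent sample against a baseline with the Population Stability Index (PSI). The sketch below is a plain-Python version under stated assumptions: equal-width bins taken from the baseline range, a small floor to avoid taking the logarithm of zero, and synthetic data. A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but any alert threshold should be tuned for the system at hand.

```python
import math

def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a recent sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # Fall back to 1.0 if the baseline is constant.

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # Clamp out-of-range values.
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Synthetic samples for illustration only.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print("same distribution:", population_stability_index(baseline, baseline))
print("shifted distribution:", population_stability_index(baseline, shifted))
```

The same function can be applied to the model's output scores as well as to its input features.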

Production

- Once an ML model has passed all the necessary tests, it is ready for production.

- However, testing does not stop here. It is important to continue monitoring the model’s performance in production and to have a plan in place for handling any issues that may arise.

- This can include automatic failover systems, backup models, and human oversight.
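The failover idea can be sketched as a small wrapper that tries a primary model, falls back to a backup, and escalates to a person when neither gives a confident answer. The function names, the `(label, confidence)` return shape, and the 0.6 confidence threshold are assumptions made for this example.

```python
def predict_with_fallback(primary, backup, features, min_confidence=0.6):
    """Return (label, confidence, source), trying primary first, then backup.

    Each model is a callable returning (label, confidence). If a model raises
    or is not confident enough, the next option is tried. When nothing
    qualifies, the request is flagged for human review.
    """
    for source, model in (("primary", primary), ("backup", backup)):
        try:
            label, confidence = model(features)
        except Exception:
            continue  # This model failed; fall through to the next option.
        if confidence >= min_confidence:
            return label, confidence, source
    return None, 0.0, "human_review"

def flaky_primary(features):
    """Hypothetical primary model that is currently unavailable."""
    raise TimeoutError("primary model unavailable")

def simple_backup(features):
    """Hypothetical backup model with a fixed confidence."""
    return ("approve" if sum(features) > 0 else "reject", 0.8)

print(predict_with_fallback(flaky_primary, simple_backup, [1.0, 2.0]))
# ('approve', 0.8, 'backup')
```

In a real deployment the `source` field would typically be logged, so that a rising share of backup or human-review decisions is itself visible in monitoring.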

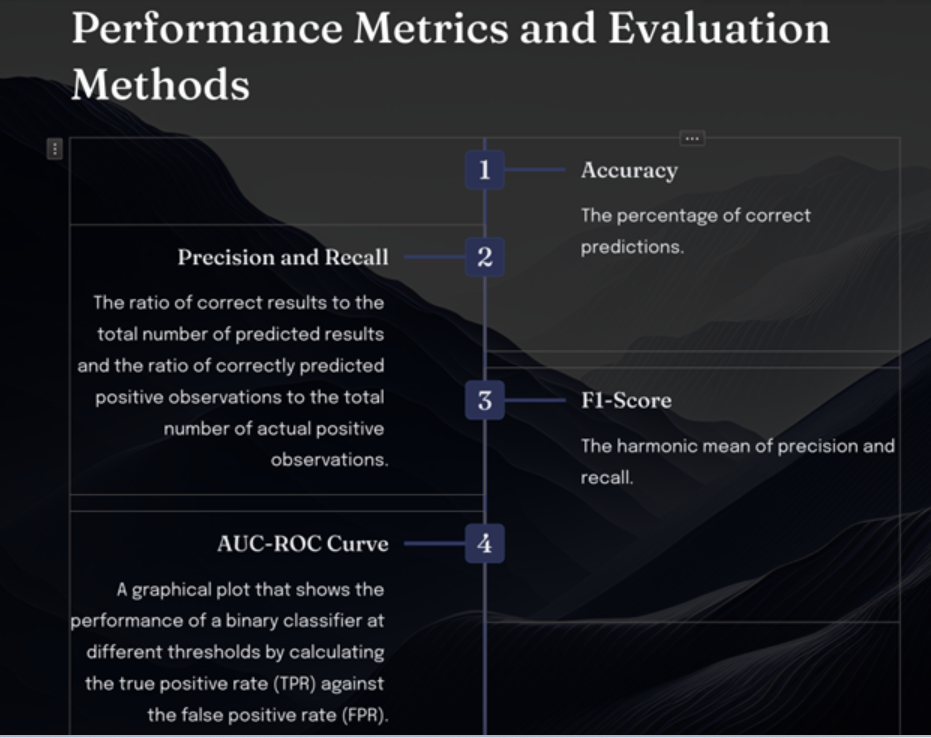

ML model testing: performance metrics and evaluation methods

- Machine learning model testing is essential to ensure the model is performing as expected and can generalize to unseen data.

- Performance metrics such as accuracy, precision, recall, and F1 score can be used to evaluate the model.

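- The evaluation method will depend on the specific machine learning task. For example, classification models are typically scored with precision, recall, and F1, while regression models are scored with error measures such as mean absolute error or root mean squared error.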

- Ready to explore an AI/ML testing strategy, or want to hire expert AI/ML testers? Contact us.
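For a binary classifier, the metrics named in this section can be computed directly from the true and predicted labels. The sketch below uses the standard definitions; the label lists are made up for illustration, and returning 0.0 when a denominator is zero is a convention chosen for this example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # True positives.
    fp = sum(t != positive and p == positive for t, p in pairs)  # False positives.
    fn = sum(t == positive and p != positive for t, p in pairs)  # False negatives.
    correct = sum(t == p for t, p in pairs)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Illustrative labels: 3 positives and 5 negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```

With these labels, accuracy is 0.75 while precision, recall, and F1 are each two thirds, which shows why accuracy alone can look better than the model's performance on the positive class.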

Conclusion

- Testing is an essential part of developing and deploying ML systems.

- By following best practices and using a comprehensive testing framework, we can ensure that our models are accurate, reliable, and safe for use in real-world applications.

- At our company, we are committed to providing the best digital solutions for AI/ML use cases and GPT/LLM apps, and we believe that proper testing is a key part of achieving that goal.

aiTest: A Closer Look

aiTest is an all-in-one testing platform designed to run comprehensive tests on your applications concurrently. It supports cross-browser and browser-version testing, as well as functional and performance tests integrated with analytics. The platform also incorporates automation features, including an LLM that helps generate and test machine learning models and generate test data for them. It is a one-stop solution for continuous testing that integrates seamlessly with CI/CD pipelines. With support for multiple languages and specialized testing for AI/ML services, aiTest helps organizations speed up their release cycles while ensuring quality and reliability.